AI tools have become crucial in employment-related decisions for companies with high-volume hiring. Applications range from resume scanning to ‘job fit’ testing and video interviewing software. These tools can exacerbate discriminatory practices when they screen candidates based on protected characteristics. Instances have been cited in recent news, such as the lawsuit filed against Workday Inc. for allegedly disqualifying applicants who are Black, disabled, or over the age of 40, and Amazon's decision to scrap its AI recruitment software after it proved to be biased against women.

To protect against discrimination in the application of these systems, the New York City Department of Consumer and Worker Protection released its final rules for Local Law 144 (otherwise known as the NYC hiring bias audit law) in April of this year with an official enforcement date of July 5th (after some delays).

What is Local Law 144?

Employers and vendors are required to obtain an independent bias audit (an “impartial evaluation by an independent auditor,” meaning a party not involved in the use, development, or distribution of the tool) for any automated employment decision tool (AEDT) they use. The final rules define AEDTs as:

“any computational process, derived from machine learning, statistical modeling, data analytics, or artificial intelligence, that issues simplified output, including a score, classification, or recommendation, that is used to substantially assist or replace discretionary decision making for making employment decisions that impact natural persons.”

Key Requirements of the Law:

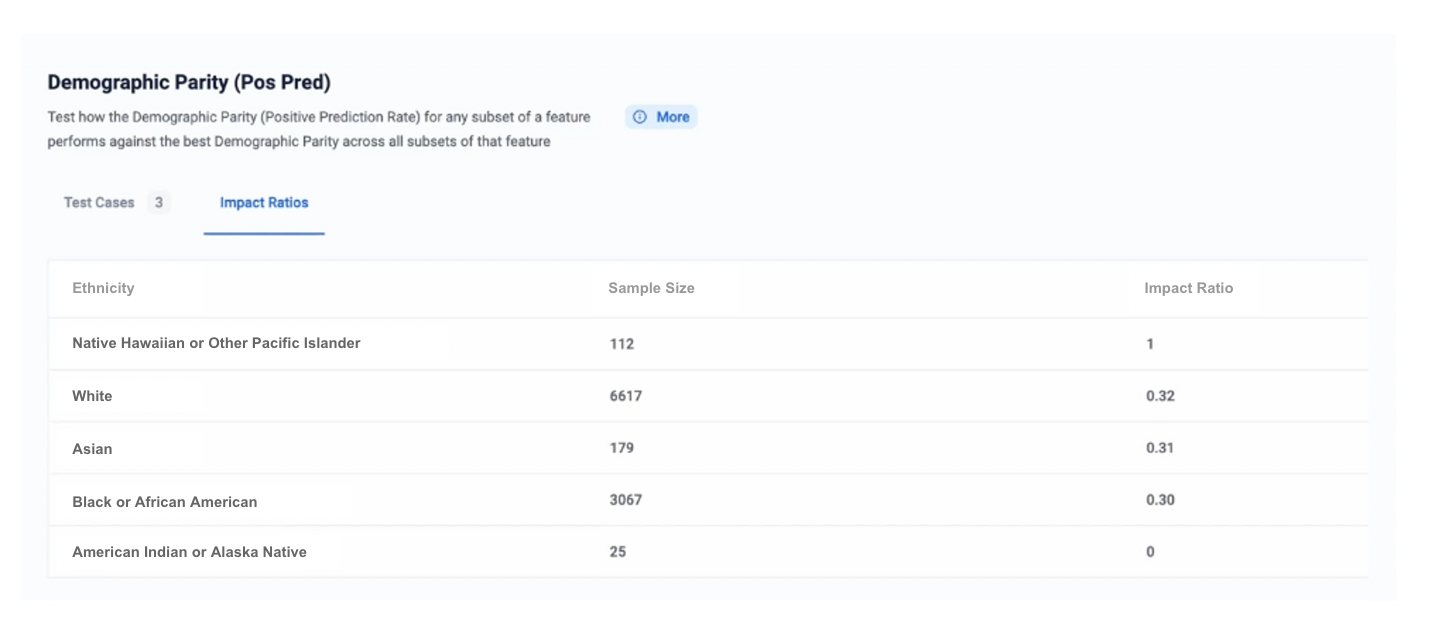

- The bias audit is specifically intended to test a technology for discriminatory impact based on sex, ethnicity, or race. The audit must calculate the selection rate and the impact ratio for each of these protected classes, as well as for the intersectional categories that combine them.

- Employers are required to inform candidates of the use of the tool with at least ten business days' advance notice and to offer an alternative selection process or a reasonable accommodation to candidates who request it.

- Once the bias audit is complete, the employer must publish a summary of the audit so that the results are publicly available. The law requires the audit to be repeated annually.

How to Prepare for the Bias Audit

Ultimately, every company that interviews candidates who reside in NYC is responsible for ensuring that their hiring practices, including the use of third-party software services providers, are unbiased. Companies should engage an independent auditor that can measure and attest to the fairness of any internal AEDT. They should also require any third-party providers to provide similar evidence.

Robust Intelligence can help employers and vendors comply with the requirements of the law with automated testing and document generation.

- The Robust Intelligence platform tests for the selection rate of the protected classes (sex, race, and ethnicity, and intersectional groups of these classes) and includes impact ratios for each of them, as required by the law. More information on the tests used to evaluate selection rate is below:

Demographic parity - one of the most well-known and strict measures of fairness. It is meant to be used in a setting where we assert that the base label rates between subgroups should be the same, even if empirically they are different. This test works by computing the Positive Prediction Rate (PPR) metric over all subsets of each protected feature (e.g. race) in the data. It raises an alert if any subset has a PPR that is below 80% of the PPR of the subset with the highest rate. This test is in line with the four-fifths rule, an EEOC guideline under which a selection rate (or Positive Prediction Rate) for any race, sex, or ethnic group that is less than four-fifths (80%) of the rate for the group with the highest rate is generally treated as evidence of adverse impact.
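To make the check concrete, here is a minimal Python sketch of a positive prediction rate comparison against the 80% threshold. It is an illustration only, not the Robust Intelligence implementation: the function names, the group labels A, B, and C, and the sample counts are all hypothetical.

```python
from collections import defaultdict


def positive_prediction_rates(groups, predictions):
    """Return the share of positive predictions (selection rate) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's rate by the rate of the most-selected group."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any positive predictions")
    return {g: rate / best for g, rate in rates.items()}


def flag_groups(rates, threshold=0.8):
    """List the groups whose impact ratio falls below the threshold."""
    return sorted(g for g, r in impact_ratios(rates).items() if r < threshold)


# Made-up data: 5/10 selected in A, 3/10 in B, 9/20 in C.
groups = ["A"] * 10 + ["B"] * 10 + ["C"] * 20
predictions = [1] * 5 + [0] * 5 + [1] * 3 + [0] * 7 + [1] * 9 + [0] * 11

rates = positive_prediction_rates(groups, predictions)
ratios = impact_ratios(rates)
flagged = flag_groups(rates)
print(flagged)  # ['B']
```

In this sample, group B is selected at 60% of group A's rate and is flagged, while group C sits at 90% and passes.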

Intersectional Group Fairness - most existing work in the fairness literature takes a binary view of fairness: either a particular group is performing worse or it is not. This binary categorization misses an important nuance, which is that biases can often be amplified in subgroups that combine membership from different protected groups, especially if such a subgroup has historically been underrepresented in opportunities. The intersectional group fairness test uses positive prediction rate to ensure that all subsets in the intersection between any two protected groups perform similarly.
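The intersectional idea can be sketched in the same way: compute the positive prediction rate for every combination of values across each pair of protected attributes, then compare each subgroup with the best-performing one. This is a hedged sketch, not the platform's code. The 80% threshold is an assumption carried over from the demographic parity test, and the field names (`sex`, `race`, `selected`), the helper `make_rows`, and the data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def intersectional_rates(records, protected, outcome="selected"):
    """Selection rate per value combination, for each pair of attributes."""
    results = {}
    for a, b in combinations(protected, 2):
        totals = defaultdict(int)
        positives = defaultdict(int)
        for row in records:
            key = (row[a], row[b])
            totals[key] += 1
            positives[key] += int(row[outcome])
        results[(a, b)] = {k: positives[k] / totals[k] for k in totals}
    return results


def flag_intersections(rates_by_pair, threshold=0.8):
    """Return (attribute pair, subgroup) entries below the assumed threshold."""
    flagged = []
    for pair, rates in rates_by_pair.items():
        best = max(rates.values())
        if best == 0:
            continue  # nobody selected, so ratios are undefined
        for subgroup, rate in rates.items():
            if rate / best < threshold:
                flagged.append((pair, subgroup))
    return sorted(flagged)


def make_rows(sex, race, n, n_selected):
    """Hypothetical helper: n applicants, the first n_selected are selected."""
    return [{"sex": sex, "race": race, "selected": i < n_selected}
            for i in range(n)]


records = (make_rows("F", "X", 10, 6) + make_rows("F", "Y", 10, 3)
           + make_rows("M", "X", 10, 4) + make_rows("M", "Y", 10, 6))

rates = intersectional_rates(records, ["sex", "race"])
flagged = flag_intersections(rates)
print(flagged)
```

In this made-up data, each attribute passes the 80% check on its own (selection rates of 0.45 versus 0.50 for both sex and race), yet the (F, Y) and (M, X) intersections fall below it, which is the kind of gap a single-attribute test can miss.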

- In addition, our automated model cards feature allows for these test results to be seamlessly formatted into shareable reports that can be exported as PDFs.

With this, we simplify the process for employers and vendors to provide evidence that their tools are not discriminating against protected classes and are compliant with this new NYC law.

Beyond NYC

Several states have also begun to address discrimination by AI hiring tools.

- In 2020, Illinois enacted the AI Video Interview Act requiring employers that use AI-enabled analytics in interview videos to comply with specific requirements.

- In 2020, Maryland passed an AI-employment law prohibiting employers from using facial recognition technology during an interview without the applicant's consent.

The Equal Employment Opportunity Commission (EEOC), a federal agency, has also become more involved in protecting workers from discrimination that results from AI-powered employment practices. It has issued guidance on how employers’ use of AI can comply with the Americans with Disabilities Act and Title VII. In addition, the EEOC joined the DOJ, CFPB, and FTC to release a joint statement on AI and their commitment to “the core principles of fairness, equality, and justice as emerging automated systems…become increasingly common in our daily lives.”

Beyond the use of AI in hiring applications, AI systems have garnered attention from federal agencies and regulatory bodies. This year, the United States’ National Institute of Standards and Technology (NIST) released the AI Risk Management Framework, and in Europe the AI Act is nearing finalization.

If you are interested in learning more about Robust Intelligence, request a demo here.
