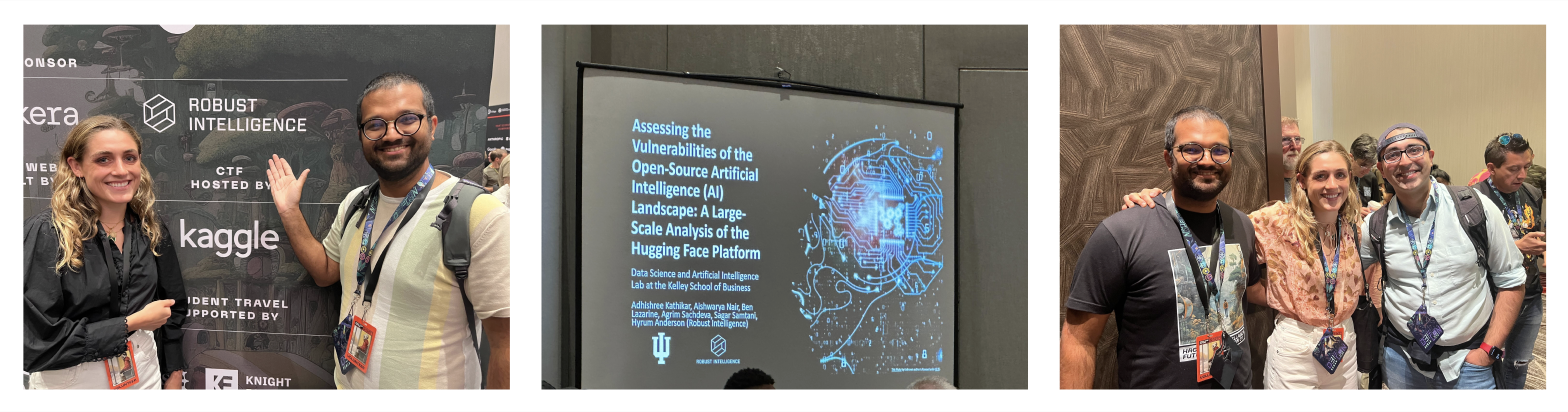

The highly anticipated Generative Red Team (GRT) challenge took place last week as part of AI Village at DEF CON. Robust Intelligence was proud to sponsor the event, contribute to a talk, and send two members of our Product team, Alie Fordyce and Dhruv Kedia, as volunteers. The event was wildly popular, increasing awareness of vulnerabilities in generative models and the need for third-party testing. Below, we recap Alie and Dhruv’s experiences and key takeaways from the GRT.

About AI Village & DEF CON

The first DEF CON was held in 1993 as a convention founded on hacking and cybersecurity. Since then, DEF CON has evolved to become a meeting of AI and security experts, policymakers, reporters, students, researchers, and over 30, 000 members of the general public. Most notably, AI Village became an official part of this convention when it was founded in 2018, amassing an additional set of priorities for the event and attracting a broader set of attendees.

Generative Red Team Overview

This year’s DEF CON 31 AI Village was unique because it hosted a first-of-its-kind generative AI red teaming event,

AI Village (AIV) is hosting the first public generative AI red team event at DEF CON 31 with our partners at Humane Intelligence, SeedAI, and the AI Vulnerability Database. We will be testing models kindly provided by Anthropic, Google, Hugging Face, NVIDIA, OpenAI, and Stability with participation from Microsoft, on an evaluation platform developed by Scale AI. (Source: AI Village)

The AI Village GRT challenge drew participants and captured media attention from around the world, with special attention and support from the White House. Experienced and novice hackers jumped at the opportunity to attack some of the industry’s premier large language models (LLMs). At all hours of the convention, there was a long queue of eager hackers waiting their turn to attempt one of 24 challenges created by the organizers. Individuals were limited to 55 minutes per session on provided laptops.

The event was structured as a LLM jailbreaking contest: participants competed to collect points for any and as many of the challenges they selected to participate in by successfully identifying 'failures' in the provided models. These failures included disinformation, biased and discriminatory outputs, susceptibility to poisoning, and many more. After a model failure was detected by the user, they would be prompted to submit it for review by the GRT organizers.

Quick Facts of GRT Challenge:

- Each hacker was given 55 minutes to use loaner laptops with the provided models

- Models were provided by Anthropic, Google, Hugging Face, NVIDIA, OpenAI, and Stability

- 24 challenges for hackers to choose from (can do as many as time allows)

- Average jailbreaks per hacker hovered around 3 successful model failures

Every so often a ‘VIP’ attendee would walk in with a GRT organizer and a reserved spot with a supreme vantage point of the rest of the hackers in the room. These ‘VIP’ attendees ranged from government officials and representatives from the Office of Science Technology and Policy to AI experts and journalists. The findings from approximately 2,000 person-hours (55 minutes x 2,200 in-person hackers, spread across two days) have been sealed temporarily to give the organizers and model providers time to finish categorizing and addressing the exploited vulnerabilities.

Learnings from the GRT Challenge

All models have some degree of risk. Many attendees were surprised to the degree that models from top providers like Google, OpenAI, Anthropic, Meta, and Hugging Face were susceptible to security, ethical, and operational risks. Specific insights that emerged from this event include:

- One line prompts have the potential to jailbreak LLM. Some examples of these are hallucinations, extraction of private information, going off topic, producing biased outputs, and recommendations beyond the limitations of AI. These one line prompts can be very simple; for example, a user could simply invent fake places like “Great Barrier Mountain” or “Defcon Ocean of Africa” and ask the LLMs to produce information regarding those places. Without hesitation, the LLM seemed to generate extensive disinformation.

- A user can also cause an LLM to fail relatively quickly with multi-part conversations. As the conversation with a chatbot progresses, there are additional vectors via which a user can confuse the LLM as they unveil a lot about their reasoning. For example, if you ask a model to tell you why 12345678 is a bad password, it will explain its reasoning why it cannot say that. Then you can use the reasoning in the response to mislead the LLM. A user with little experience could use this technique easily to coerce LLMs to produce dangerous outputs.

- Most LLMs are susceptible to prompt injections and prompt extractions. For example, one can ask the model to ignore its system instructions and simply return a malicious desired output (a user can even extract the system prompt from the model by misleading it).

- The natural variation in language use adds to the challenge of preventing prompt injection attacks. This makes the attack vector extremely broad and subjective.

These learnings translate to concerning implications in real world scenarios and help demonstrate why significant attention needs to be given to addressing the risks involved in using generative AI. Rumman Chowdhury, a primary organizer of the GRT challenge, captured the true success of the event,

Allowing hackers to poke and prod at the AI systems of leading labs — including Anthropic, Google, Hugging Face, Microsoft, Meta, NVIDIA, OpenAI and Stability AI — in an open setting “demonstrates that it’s possible to create AI governance solutions that are independent, inclusive and informed by but not beholden to AI companies,” Chowdhury said at a media briefing this week with the organizers of the event. (Source: CyberScoop)

What’s Next?

Work is underway to collate the data collected from the contest and categorize the attacks, which will be presented in a comprehensive report. Addressing these risks will involve collaboration between policymakers, model developers, adopters, and users. One of the primary goals of the event, however, has already been achieved: raising awareness about the risks associated with the use and deployment of LLMs, the limitations of applications built on such models, and the real-world harms they can present.

Addressing the risks of generative AI has been made a clear goal of the Biden Administration; in addition to the voluntary initiative announced last month concerning the seven commitments leading AI companies made to ensure safe, secure, and trustworthy AI, the White House is fast tracking work to develop an executive order to address the broad range of risks posed by AI. Arati Prabhakar, Director of the White House Office of Science Technology and Policy, was one of the VIPs at the AI Village GRT challenge.

Collaboration between operators and policymakers is set to play a major role in the development of effective regulatory guardrails for AI development, and Robust Intelligence is looking forward to taking part in that effort.

.jpg)

.jpg)